Open Source H2O-Danube2-1.8B

NEWS FLASH

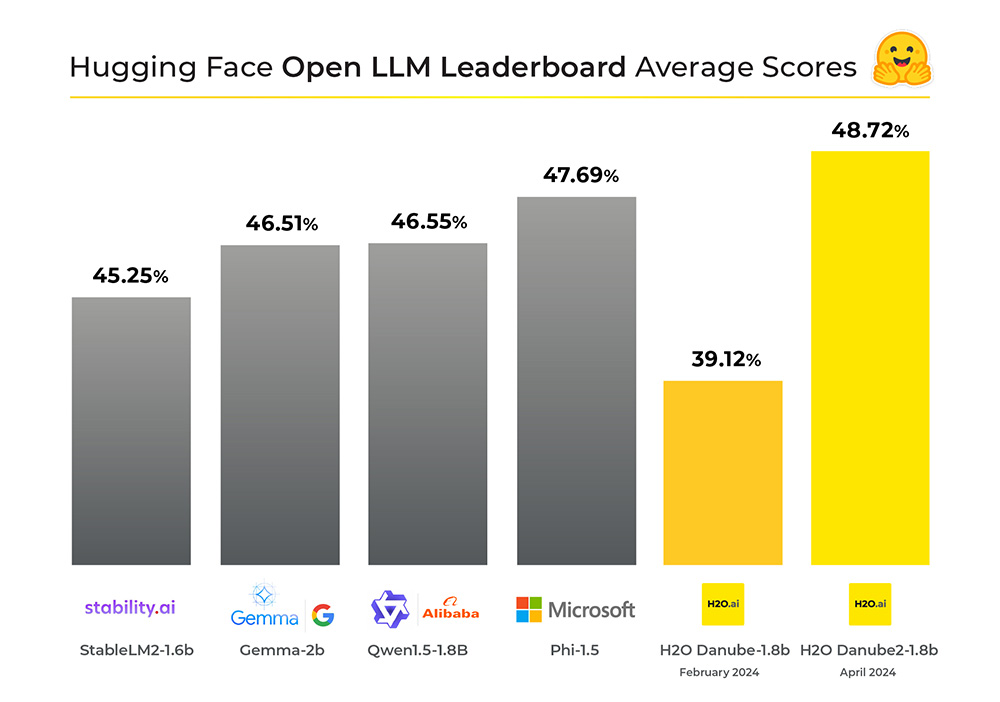

#1 Foundation Model on Hugging Face Open LLM Leaderboard for the <2B Range

H2O-Danube2-1.8B builds on the success of its predecessor, H2O-Danube 1.8B, with notable upgrades and optimizations that have propelled it to the forefront of the <2B small language model (SLM) category. Trained on 2 trillion high-quality tokens, the model uses the Mistral architecture with modifications, such as dropping sliding-window attention, to deliver strong performance on natural language processing tasks.
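To make the attention change concrete, here is a minimal sketch of what dropping windowing (sliding-window) attention means at the mask level. It is an illustration only, not H2O.ai's implementation: the function name `causal_mask`, the sequence length, and the window size of 2 are all hypothetical choices made for the example.

```python
def causal_mask(seq_len, window=None):
    """Build a seq_len x seq_len boolean attention mask.

    mask[i][j] is True when token i may attend to token j.
    window=None gives full causal attention (every earlier token is visible);
    an integer window restricts each token to the most recent `window` tokens,
    which is the sliding-window variant.
    """
    mask = []
    for i in range(seq_len):
        row = []
        for j in range(seq_len):
            visible = j <= i  # causal: never attend to future tokens
            if window is not None:
                visible = visible and (i - j) < window  # hypothetical window limit
            row.append(visible)
        mask.append(row)
    return mask


# Full causal attention (sliding window dropped) vs. a window of 2 tokens.
full = causal_mask(5)
windowed = causal_mask(5, window=2)

print(full[4])      # the last token sees all five positions
print(windowed[4])  # the last token sees only the two most recent positions
```

With the window removed, every token can attend to the entire preceding context rather than only a fixed number of recent tokens.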

H2O-Danube2-1.8B has secured the top position on the Hugging Face Open LLM Leaderboard for the <2B range, surpassing models from Microsoft and Alibaba, and even the much larger Gemma-2B model from Google, which falls in the 2.5B-parameter category.